March 15, 2024

Unpacking the EU AI Act: A Milestone in AI Regulation

Written by: Mónica Fernández Peñalver

In a significant step towards regulating artificial intelligence (AI), the European Union (EU) has provisionally agreed on the AI Act (AIA), setting a precedent as the first comprehensive legal framework for AI by a major global economy. Expected to become law in the first half of 2024, the AIA introduces a prescriptive, risk-based approach to managing single-purpose and general-purpose AI systems, with the aim of safeguarding individuals' rights and societal values.

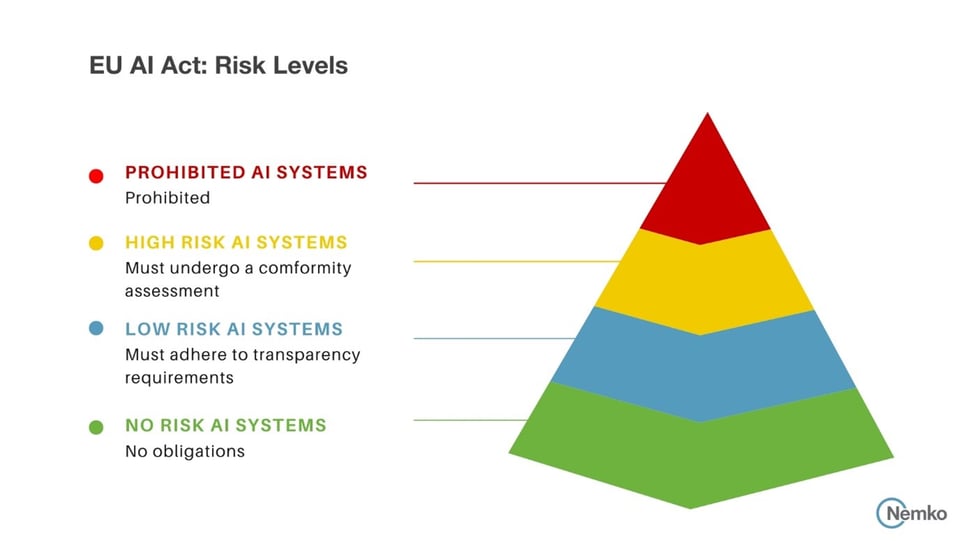

The AIA's Risk-Based Regulatory Approach

The AIA classifies AI systems according to the level of risk they pose to human rights, health, safety, and society. It bans outright AI systems considered to pose unacceptable risks, while high-risk AI systems can be deployed only under strict conditions. The Act focuses on high-risk applications, so that critical areas such as healthcare, law enforcement, and employment are subject to stringent requirements that promote trust and safety in the development and deployment of AI in the EU market. Moreover, given their systemic risk profile, the Act subjects all general-purpose AI (GPAI) models to transparency requirements, with high-impact GPAI models facing even more rigorous obligations.

Overview of AI risk levels as defined by the EU AI Act

Non-exhaustive list of examples of AI systems categorized by level and type of risk in the EU AI Act.
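The tiering described in this section can be sketched as a small lookup table. This is an illustrative sketch only, not the Act's legal text: the names `RiskTier`, `OBLIGATIONS`, `may_be_deployed`, and `obligations_for` are hypothetical, and the obligations listed are limited to those named in this article.

```python
from enum import Enum


class RiskTier(Enum):
    """Hypothetical labels for the risk categories discussed in this article."""
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    GPAI = "general-purpose"
    GPAI_HIGH_IMPACT = "high-impact general-purpose"


# Assumption: a simplified summary of obligations mentioned in this article,
# not an exhaustive or authoritative reading of the AIA.
OBLIGATIONS = {
    RiskTier.UNACCEPTABLE: ["banned outright"],
    RiskTier.HIGH: [
        "conformity assessment",
        "registration in the EU AI database",
        "fundamental rights impact assessment (deployers)",
    ],
    RiskTier.GPAI: ["transparency requirements"],
    RiskTier.GPAI_HIGH_IMPACT: [
        "transparency requirements",
        "additional, more rigorous obligations",
    ],
}


def may_be_deployed(tier: RiskTier) -> bool:
    """Only systems posing unacceptable risk are banned outright."""
    return tier is not RiskTier.UNACCEPTABLE


def obligations_for(tier: RiskTier) -> list[str]:
    """Return a copy of the obligations recorded for a tier."""
    return list(OBLIGATIONS[tier])


if __name__ == "__main__":
    for tier in RiskTier:
        status = "deployable" if may_be_deployed(tier) else "prohibited"
        print(f"{tier.value}: {status}; {', '.join(obligations_for(tier))}")
```

A table like this could serve as the starting point for an internal AI inventory, with each system tagged by tier before a detailed legal assessment is carried out.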

Extraterritorial Implications

The AIA's reach extends beyond the EU, affecting organizations worldwide that market or use AI systems within the Union. This extraterritorial scope compels multinational corporations to adapt: they must either reevaluate their global AI strategies to comply with the AIA or risk scaling back their AI use in the EU market. The former scenario may prompt innovation in AI governance practices, pushing firms to prioritize ethical considerations and transparency in their AI systems and setting a new global standard for AI development and deployment.

Operational and Compliance Considerations

The Act forces organizations to undertake a thorough examination of their AI systems, identifying those impacted by the new regulations and assessing necessary compliance measures. This process involves not only technical adjustments but also a shift in corporate culture towards greater accountability in AI usage. The emphasis on documentation, risk assessment, and transparency throughout an AI system's lifecycle encourages a more conscious and responsible approach to AI development.

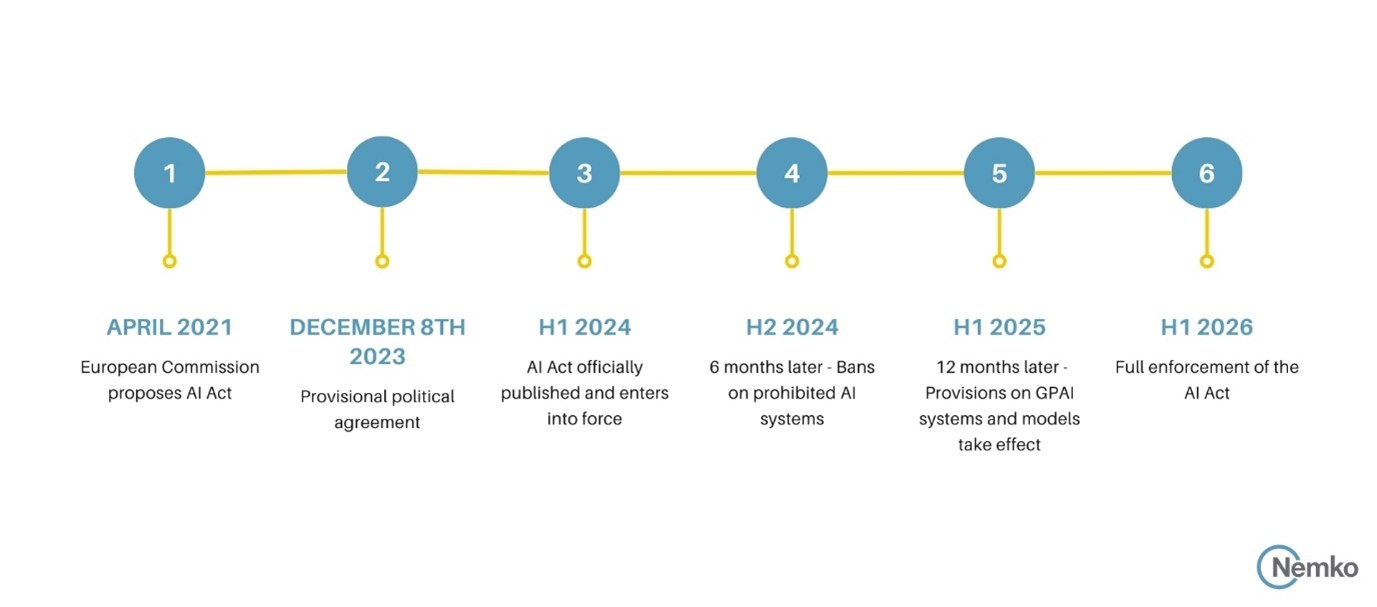

Timeline and Implementation Phases

Following a prolonged negotiation phase since its proposal in April 2021, the political agreement on the AIA marks a pivotal advance towards its enactment. Organizations will have a two-year window after the Act enters into force to ensure compliance, with specific provisions taking effect earlier. Bans on prohibited AI systems will take effect 6 months after the AIA’s entry into force, followed by provisions on GPAI systems and models, which will take effect 12 months after the AIA’s entry into force.

AI Act timeline
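The phased deadlines above are all offsets from the date of entry into force, so they can be worked out with simple date arithmetic. The sketch below assumes a placeholder entry-into-force date, since the actual date is not given in this article; `add_months`, `MILESTONES`, and `compliance_dates` are hypothetical names, and the result is a rough planning aid rather than a statement of the legally binding dates.

```python
import calendar
from datetime import date

# Offsets in months after entry into force, as described in this article.
MILESTONES = {
    "Bans on prohibited AI systems": 6,
    "Provisions on GPAI systems and models": 12,
    "General compliance window ends": 24,
}


def add_months(start: date, months: int) -> date:
    """Shift a date by whole months, clamping to the last day of the target month."""
    index = start.year * 12 + (start.month - 1) + months
    year, month_zero_based = divmod(index, 12)
    month = month_zero_based + 1
    last_day = calendar.monthrange(year, month)[1]
    return date(year, month, min(start.day, last_day))


def compliance_dates(entry_into_force: date) -> dict[str, date]:
    """Map each milestone to its date for a given entry-into-force date."""
    return {
        name: add_months(entry_into_force, offset)
        for name, offset in MILESTONES.items()
    }


if __name__ == "__main__":
    # Assumption: placeholder date for illustration only.
    hypothetical_entry = date(2024, 7, 1)
    for name, deadline in compliance_dates(hypothetical_entry).items():
        print(f"{deadline.isoformat()}  {name}")
```

Swapping in the real entry-into-force date once it is known gives an approximate schedule for internal planning.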

Detailed Provisions

Definition

The AIA aims to align its definition of AI systems with that of the Organization for Economic Cooperation and Development (OECD), though the precise wording is pending. This definition is crucial for distinguishing AI from simpler software systems and ensuring a proportionate regulatory scope. This clarity is important for both regulators and the regulated entities, facilitating compliance and enforcement.

Assigning Roles in the AI Value Chain

The AIA clarifies the responsibilities of various stakeholders in the AI value chain, including providers, importers, distributors, and deployers. Understanding these roles is essential for compliance, especially for primary actors like AI system providers and deployers. Such a structured approach to accountability is crucial for building a responsible AI landscape, where each participant contributes to the overarching goal of using AI ethically and responsibly.

Key Requirements for High-Risk AI Systems

The Act categorizes AI systems by their potential risks, emphasizing a balance between innovation and safety. High-risk systems are subject to strict compliance standards, including performing conformity assessments and registering in the new EU AI database. These requirements call for robust governance and risk management frameworks. Deployers of high-risk AI systems also have to conduct a Fundamental Rights Impact Assessment (FRIA), assessing the AI system's potential impacts on privacy, non-discrimination, and freedom of expression. This requirement underscores the Act's commitment to protecting fundamental rights.

Proportionality and SME Support

Recognizing the burden of compliance, the AIA includes measures to alleviate impacts on small and medium-sized enterprises (SMEs), such as regulatory sandboxes and prioritized access to innovation-promoting environments. These initiatives aim to foster AI development while ensuring regulatory alignment.

Global Impact

The AIA sets a new benchmark for AI regulation with implications for firms worldwide. Organizations must navigate the Act's requirements, adapting their AI strategies and operations to align with EU standards and anticipating the broader impact on global AI governance. The AI Act could well become a template for international cooperation in the regulation of AI, promoting a global tech ecosystem that is both innovative and responsible.

Conclusion

The EU AI Act represents a critical juncture in AI regulation, providing a structured framework to ensure AI's ethical and safe deployment. As organizations prepare for its implementation, strategic foresight and adaptability will be key to navigating the evolving AI regulatory landscape, both within the EU and globally.

Nemko, renowned for its proficiency in testing, inspection, and certification services, stands ready to guide organizations through the intricate requirements of the EU AI Act. Offering a suite of services including gap analysis, consulting, and training, Nemko is equipped to aid businesses in effectively implementing the EU AI Act's requirements and using AI in an ethical and responsible manner.

Contact us

For organizations looking to adopt AI within stringent ethical and regulatory frameworks, collaborating with Nemko offers a straightforward path to compliance. Reach out to discover how Nemko can help you meet regulatory and ethical guidelines while maximizing AI's potential in your operations.

Mónica Fernández Peñalver

Mónica has actively been involved in projects that advocate for and advance Responsible AI through research, education, and policy. Before joining Nemko, she dedicated herself to exploring the ethical, legal, and social challenges of AI fairness for the detection and mitigation of bias. She holds a master’s degree in...